By Paul E. Ceruzzi (2010)

Pages: 445, Final verdict: Great-read

There are currently more than 22 billion computing devices in the world. They are everywhere: in laptops, smartphones, and cars, and in every area of the economy. But unlike many of the technologies we use today, computers are a fairly recent invention.

Paul E. Ceruzzi, curator emeritus at the Smithsonian's National Air and Space Museum in Washington, D.C., is one of the world's top experts on the topic. He has published multiple books about the Internet, GPS and navigation systems, and the history of computers.

I recently picked up the second edition of A History of Modern Computing, the 2003 update of the original 1998 book, in which Ceruzzi masterfully retells the first 50 years of commercial computers, from 1945 until the early days of the Internet.

Computer history, bit by bit

History is often hard on the naysayers. In 2007 Steve Ballmer dismissed the iPhone as a "$500 subsidized item" that would not get significant market share. In the late 40s, Howard Aiken, the famous mathematician and builder of the Mark I general-purpose calculator, did not believe there would be a commercial market for computers.

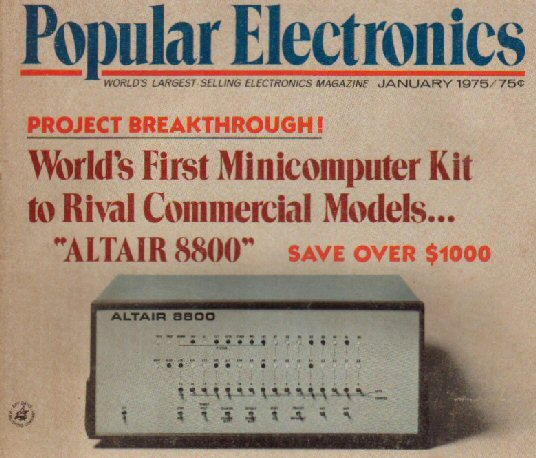

Both turned out to be wrong. Starting in 1975 with the MITS Altair 8800, the market for commercial computers took off in the 70s and 80s. As for the iPhone, besides being a tremendous market success - Apple sold 217 million iPhones in 2018 - it became a cultural icon that changed the way we communicate, work, and play.

But neither outcome was obvious. More than just another history book, this is what A History of Modern Computing is about: the unconventional, non-obvious first 50 years of commercial computers, from their humble beginnings in the aftermath of World War II with the UNIVAC to the early days of the Internet in the late 90s. Written for computer enthusiasts, the book is as much about the ins and outs of the evolution of computers - architecture, components, languages - as it is about the companies, rivalries and the people who "willed [the computer age] into existence".

In 1959 the Internal Revenue Service of the United States wanted to computerize its operations. It chose IBM to supply the mainframe computers it needed to modernize its tax collection processes. Around the same time it was NASA, which also chose IBM - two IBM 7090 computers sold at the equivalent of $20m today - this time to perform real-time calculation of rocket trajectories for the space program. Time and again, government (and military in particular) spending was the forcing function that accelerated innovation in computing. It provided private companies with the capital and production capacity to reach economies of scale, dropping unit costs low enough to make personal computers possible.

Ceruzzi argues that the convergence of hardware advances by "semiconductor engineers with their ever-more-powerful microprocessors and ever-more-spacious memory chips" with software developments in time-sharing systems, which introduced more people to computing, made the 1970s the decade in which the personal computer became ripe. I loved reading about all the companies, stories and people that were involved, in parallel, in shaping what computers are today. Companies that sold computer time to the public, hacker groups that traded tips on how to use their programmable Texas Instruments and HP calculators, and the start of Microsoft all happened in this decade.

But perhaps the most fascinating story of all is that of Gary Kildall, the creator of the most popular operating system used by the early hobbyists of personal computers, who many argue should have been IBM's obvious choice to develop the operating system for the IBM PC. But IBM ended up going with Bill Gates and Microsoft, and the "rest is history". If nothing else, it is fun to imagine an alternative universe where Microsoft did not win the IBM deal - the defining moment that started MSFT's market dominance - and the Microsoft we know today might never have existed.

There is so much more packed into the book's 400+ pages: stories of the IBM PC and the clone market, the rise of productivity software with the first spreadsheets, and how a small project by the Advanced Research Projects Agency that connected four research institutions in the US was the spark that gave birth to the Internet.

Bottom line

You won't find A History of Modern Computing in your run-of-the-mill bookstore, or in the reading recommendations of popular media publications. It probably sits quietly in the libraries of university computer science departments around the world.

But I'd argue it shouldn't. For those curious about technology and willing to endure a bit of talk about circuits, core memory, diagrams of operating systems and other "computery things", A History of Modern Computing is a rich, rewarding book. It is most likely the best book in the world about the history of computing, and its focus on the early decades of computers brings to light dozens of names, companies and stories most of us have never heard of.

It also reminds us of how recent the field is. It is genuinely exciting to read how, in a short amount of time, computers went from room-sized machines that could handle only fairly basic calculations to ubiquitous devices that took us to the moon, bring us closer to our loved ones and can save our lives.